The NASA Ames iPSC/860 log

| System: | 128-node iPSC/860 hypercube |

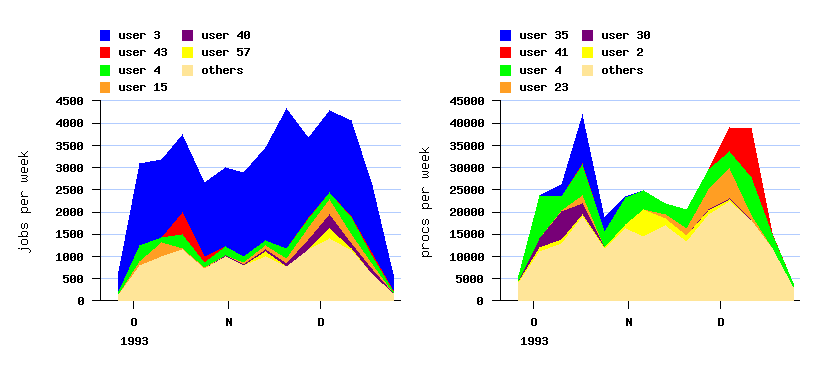

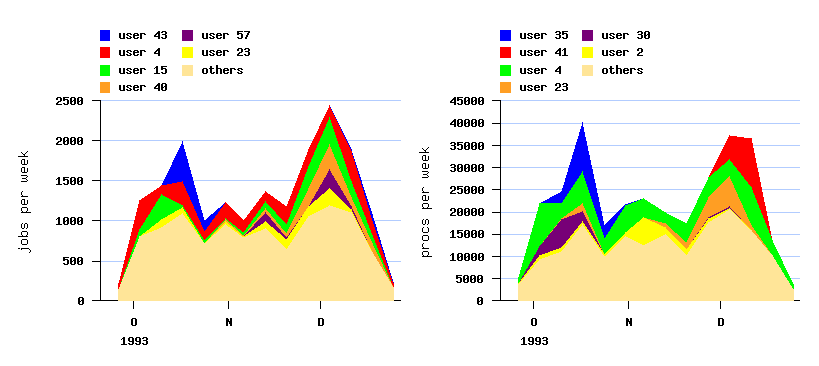

| Duration: | October 1993 thru December 1993 |

| Jobs: | 42050 total, 14794 user jobs |

This log contains three months' worth of sanitized accounting records for the 128-node iPSC/860 located in the Numerical Aerodynamic Simulation (NAS) Systems Division at NASA Ames Research Center. The NAS facility supports industry, academia, and government labs across the country. The workload on the iPSC/860 is a mix of interactive and batch jobs (development and production), consisting mainly of computational aeroscience applications. For more information about NAS, see http://www.nas.nasa.gov/.

This somewhat aged log has the distinction of being the first to be analyzed in detail; the results are described in the paper cited below. The log includes basic information about the number of nodes, runtime, start time, user, and command of each job.

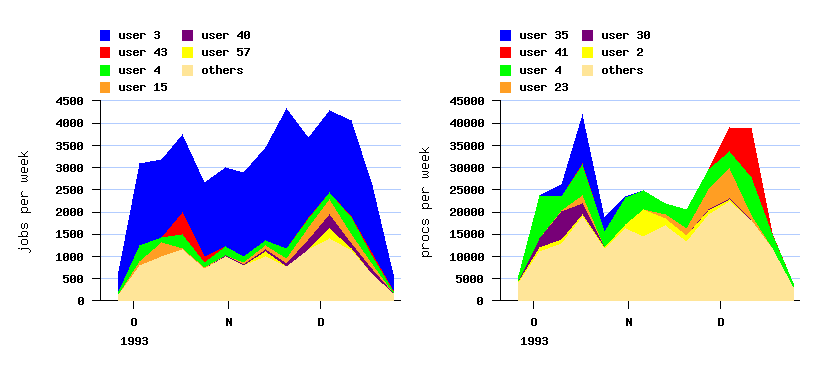

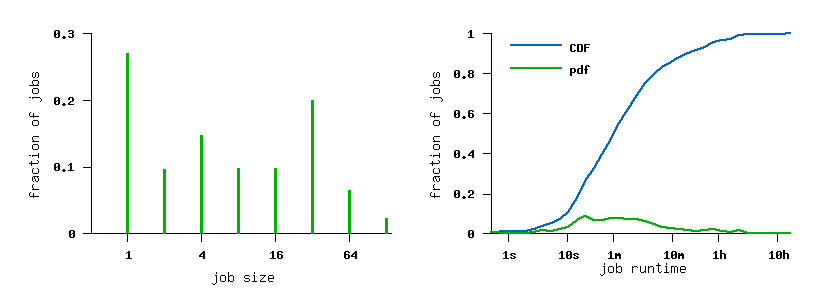

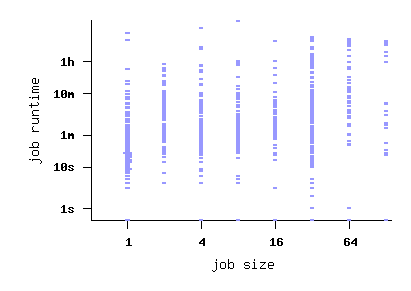

The number of nodes is limited to powers of two due to the hypercube architecture. Note that the log does not include arrival information, only start times.

The workload log from the NASA Ames iPSC/860 was graciously provided by Bill Nitzberg, who also helped with background information and interpretation. If you use this log in your work, please use a similar acknowledgment. You can also reference the following:

D. G. Feitelson and B. Nitzberg, "Job characteristics of a production parallel scientific workload on the NASA Ames iPSC/860". In Job Scheduling Strategies for Parallel Processing, D. G. Feitelson and L. Rudolph (Eds.), Springer-Verlag, 1995, Lect. Notes Comput. Sci. vol. 949, pp. 337-360.


System Environment

The iPSC/860 machine located at NASA Ames was a 128-node hypercube. At the time it was the workhorse of the NAS facility for scientific computations (it has since been decommissioned). Up to 9 jobs could run on the system at the same time, each using a distinct subcube. Because jobs run on subcubes, job sizes are limited to powers of two.

The following summarizes the resource usage rules in effect during the time covered by the log. Batch jobs were handled by NQS, which was configured with the following queues:

| Time limit (h:mm) | 16 nodes | 32 nodes | 64 nodes | 128 nodes |
|---|---|---|---|---|
| 0:20 | q16s*# | q32s*# | q64s# | q128s# |
| 1:00 | q16m* | q32m* | q64m | q128m |
| 3:00 | q16l | q32l | q64l | q128l |

"*" = active during prime time ("*" is not part of the name)

"#" = active during weekend days

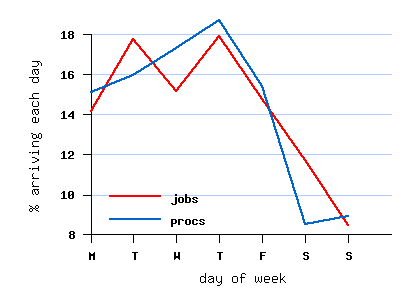
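The queue table can be read as a lookup from a node count and a time limit to a queue name. The Python sketch below assumes a "smallest queue that fits" routing rule and reads the time limits as hours:minutes; both are our assumptions, since the log description does not say how requests were assigned to queues. `pick_queue` is a hypothetical helper.

```python
# Hypothetical sketch: choose an NQS queue from the table above.
# Assumptions: time limits are h:mm (0:20, 1:00, 3:00), and a request
# goes to the smallest queue that fits it; neither is stated in the log.

# Time limit in minutes, keyed by queue-name suffix (dicts keep order).
TIME_LIMITS = {"s": 20, "m": 60, "l": 180}
# Node counts offered by the batch queues.
QUEUE_SIZES = (16, 32, 64, 128)


def pick_queue(nodes: int, minutes: int) -> str:
    """Return the name of the smallest queue that fits the request."""
    size = next((n for n in QUEUE_SIZES if n >= nodes), None)
    if size is None:
        raise ValueError(f"no queue offers {nodes} nodes")
    for suffix, limit in TIME_LIMITS.items():
        if minutes <= limit:
            return f"q{size}{suffix}"
    raise ValueError(f"no queue allows {minutes} minutes")


print(pick_queue(20, 45))   # q32m
print(pick_queue(64, 120))  # q64l
```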

Prime time is defined as Monday to Friday, 6:00 to 20:00 PST. During this time, the running queues are q16s, q16m, q32s, and q32m. NQS jobs can use no more than 64 nodes (the size of the batch partition), and NQS will not kill interactive jobs.

The rest of the time is non-prime time. At such times all queues are runnable, and NQS jobs can use the entire cube. Moreover, NQS will kill interactive jobs to make room for NQS jobs.
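The prime-time rules amount to a simple predicate on local (Pacific) wall-clock time. This sketch assumes that 20:00 itself already counts as non-prime time, and it leaves the "#" weekend marking aside, since the text states that all queues are runnable outside prime time. The function names are hypothetical.

```python
from datetime import datetime

# Queues marked "*" in the table: the only ones running in prime time.
PRIME_TIME_QUEUES = {"q16s", "q16m", "q32s", "q32m"}


def is_prime_time(t: datetime) -> bool:
    """Monday to Friday, 6:00 to 20:00 (t is Pacific wall-clock time).

    Assumption: 20:00 itself is treated as non-prime time.
    """
    return t.weekday() < 5 and 6 <= t.hour < 20  # weekday(): Monday == 0


def nqs_node_limit(t: datetime) -> int:
    """Largest number of nodes an NQS job may use at time t."""
    return 64 if is_prime_time(t) else 128


def queue_is_running(queue: str, t: datetime) -> bool:
    """Prime time: only the "*" queues run; otherwise all queues do.

    The "#" (weekend day) marking is not modeled here.
    """
    return queue in PRIME_TIME_QUEUES or not is_prime_time(t)
```

With these rules, a 64-node job in q64s waits until 20:00 on a weekday, while during non-prime time the limit rises to the full 128-node cube.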

Log Format

The original log file is available as NASA-iPSC-1993-0. This file contains one line per completed job, with the following white-space-separated fields:

- User.
  User names have been changed (sanitized) by text substitution, e.g. user "nitzberg" was replaced by "develop7". There are the following classes of users:

  | Class | Description |
  |---|---|
  | root | root |
  | sysadmin | Operations account |
  | intel | Intel analysts |
  | develop | NAS system development staff |
  | support | NAS system support staff |
  | user | Scientific users (including NAS researchers) |

- Job.
  Job names have also been sanitized by text substitution. Batch jobs are denoted by "nqs0", "nqs1", etc. It was not possible to determine whether two batch jobs ran the same application, so each batch job has its own number. All other jobs were interactive ("cmd0", "cmd1", etc.). The names of common UNIX commands were not sanitized: a.out, cat, cp, grep, ls, nsh, ps, pwd, rcp, and rm. Note that the system operators automatically ran the "pwd" command at high frequency to monitor system availability, so there are thousands of such jobs in the log.

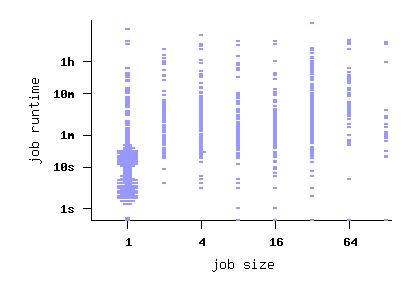

- Number of nodes.
  The number of nodes is always a power of two, as jobs must run on a subcube. It can also be 0, which means that the job ran on the service node, not on the hypercube.

- Run time.
  This is the wall-clock running time of the entire job, in seconds; it is not the number of "node seconds".

- Start date.

- Start time.
  This is Pacific time (Daylight or Standard, depending on the day of the year).
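A job line can be split on white space into the six fields above. This sketch keeps the start date and time as raw strings, because their exact textual format is not documented here; it assumes the runtime is an integer number of seconds, and it rejects lines that do not have exactly six fields (for example, a line with a missing application name). `JobRecord` and `parse_job_line` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    user: str
    job: str
    nodes: int
    runtime: int      # wall-clock seconds (assumed to be an integer)
    start_date: str   # raw text; the date format is not specified here
    start_time: str   # raw text, Pacific local time

    @property
    def on_service_node(self) -> bool:
        return self.nodes == 0

    @property
    def node_seconds(self) -> int:
        # The log stores wall-clock time, so node-seconds must be derived.
        return self.nodes * self.runtime


def parse_job_line(line: str) -> JobRecord:
    fields = line.split()
    if len(fields) != 6 or fields[0] == "special":
        raise ValueError(f"not a job record: {line!r}")
    user, job, nodes, runtime, start_date, start_time = fields
    n = int(nodes)
    # Valid sizes: 0 (service node) or a power of two (a subcube).
    if n < 0 or (n != 0 and n & (n - 1) != 0):
        raise ValueError(f"invalid node count {n}")
    return JobRecord(user, job, n, int(runtime), start_date, start_time)
```

For a made-up line `user12 nqs345 32 1200 DATE TIME` (where DATE and TIME stand in for the real fields), `node_seconds` is 32 × 1200 = 38400.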

The log also contains special entries about system status. Again there is one line per entry:

"special" System Type Duration Start-Date Start-time Comments...

These entries are distinguished by the first word in the line, which is "special".

"System" is nearly always "CUBE", referring to the iPSC/860.

"Type" is one of:

| Type | Meaning |
|---|---|
| D | Dedicated Time (reserved for exclusive use by a user or sysadmin) |
| P | Preventative Maintenance |
| M | Scheduled Facility Outage |
| S | Software Failure |
| H | Hardware Failure |
| F | Unscheduled Facility Outage |
| O | Other |

Note that jobs may still run during dedicated time (type "D"); dedicated time is used to restrict access to selected users for a period of time.

To be consistent with job entries, the special entries give "Duration" and "Start-time" to the nearest second. However, all times were reported in minutes, and are only accurate to within a few minutes.
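Special entries use the same white-space layout but start with the keyword "special" and end with free-text comments. This sketch splits off at most six leading fields so that the comments stay intact, and keeps the date and time as raw text. The names are hypothetical, and the sample line in the test is made up.

```python
from dataclasses import dataclass

# Type codes of special entries, as listed in the table above.
SPECIAL_TYPES = {
    "D": "Dedicated Time",
    "P": "Preventative Maintenance",
    "M": "Scheduled Facility Outage",
    "S": "Software Failure",
    "H": "Hardware Failure",
    "F": "Unscheduled Facility Outage",
    "O": "Other",
}


@dataclass
class SpecialEntry:
    system: str      # nearly always "CUBE"
    kind: str        # a key of SPECIAL_TYPES
    duration: int    # seconds, but only accurate to a few minutes
    start_date: str  # raw text
    start_time: str  # raw text
    comments: str

    @property
    def description(self) -> str:
        return SPECIAL_TYPES[self.kind]


def parse_special_line(line: str) -> SpecialEntry:
    # maxsplit=6 leaves the free-text comment (if any) as the 7th item.
    fields = line.strip().split(maxsplit=6)
    if len(fields) < 6 or fields[0] != "special":
        raise ValueError(f"not a special entry: {line!r}")
    _, system, kind, duration, start_date, start_time, *rest = fields
    if kind not in SPECIAL_TYPES:
        raise ValueError(f"unknown type {kind!r}")
    return SpecialEntry(system, kind, int(duration), start_date, start_time,
                        rest[0] if rest else "")
```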

Conversion Notes

The converted log is available as NASA-iPSC-1993-3.swf. The conversion from the original format to the standard workload format is generally straightforward. It was done subject to the following:

- The log does not contain data about submit times. The start times were therefore used in place of submit times.

- All the different types of system personnel identified in the original log were grouped into a single group (group 2).

- During the conversion, all jobs with 0 nodes (meaning that they ran on the service node) were deleted. "Special" records were also deleted. This is why the original log contains 43910 lines, but the SWF log only has 42264 jobs.

- The conversion loses the following data, which cannot be represented in the SWF:
  - The original log did not sanitize Unix commands. In the converted log they are sanitized together with user applications.

- The following anomalies were identified in the conversion:
  - The application being run was missing in 1044 jobs.

The conversion was done by a log-specific parser in conjunction with a more general converter module.

The difference between conversion 3 (reflected in NASA-iPSC-1993-3.swf) and conversion 2 (NASA-iPSC-1993-2.swf) is that in the older conversion wait times were listed as 0. In the new one this was changed to -1, as we actually do not know what the wait times were (or what the original submit times were).

The difference between conversion 2 (reflected in NASA-iPSC-1993-2.swf) and conversion 1 (NASA-iPSC-1993-1.swf) is that the original conversion used timegm to convert dates and times into UTC. This is wrong when daylight saving time is in effect. Conversion 2 used timelocal with the correct timezone setting, which is hopefully the right thing to do.
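Why daylight saving time matters is easiest to see by converting a Pacific wall-clock time to UTC by hand. This Python sketch does not reproduce the converter: instead of a timezone database it hard-codes the US daylight saving rule in force from 1987 through 2006 (from 2:00 on the first Sunday in April to 2:00 on the last Sunday in October), and it resolves the repeated hour in late October as daylight time. `pacific_to_utc` is a hypothetical helper; Python's `calendar.timegm`, which reads a time tuple as UTC, plays the role of timegm.

```python
import calendar

# Assumption: the US daylight saving rule of 1987-2006, hard-coded
# instead of consulting a timezone database.
PST_OFFSET_HOURS = 8  # Pacific Standard Time = UTC-8
PDT_OFFSET_HOURS = 7  # Pacific Daylight Time = UTC-7


def first_sunday(year: int, month: int) -> int:
    # calendar.weekday(): Monday == 0 ... Sunday == 6
    return next(d for d in range(1, 8) if calendar.weekday(year, month, d) == 6)


def last_sunday(year: int, month: int) -> int:
    days_in_month = calendar.monthrange(year, month)[1]
    return next(d for d in range(days_in_month, days_in_month - 7, -1)
                if calendar.weekday(year, month, d) == 6)


def pacific_to_utc(year: int, month: int, day: int,
                   hour: int, minute: int, second: int) -> int:
    """Seconds since the epoch for a Pacific wall-clock time."""
    # Daylight time: [2:00 first Sunday of April, 2:00 last Sunday of October).
    local = (month, day, hour)
    dst_start = (4, first_sunday(year, 4), 2)
    dst_end = (10, last_sunday(year, 10), 2)
    offset = PDT_OFFSET_HOURS if dst_start <= local < dst_end else PST_OFFSET_HOURS
    # timegm() treats the tuple as UTC; adding the offset corrects for local time.
    return calendar.timegm((year, month, day, hour, minute, second)) + offset * 3600
```

In 1993 the switch back to standard time fell on October 31, so 12:00 on October 15 maps to 19:00 UTC while 12:00 on November 15 maps to 20:00 UTC; any single fixed offset would be right for only one of the two dates.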

Usage Notes

The original log contains 24,025 executions of the Unix pwd command on 1 node by sysadmin staff (out of a total of 42,264 jobs, so this is 56.8% of the log; the slightly different numbers that appear in the original paper are due to the fact that the original analysis ignored all 0-time jobs, and here they are included). This reflects a practice by the system administrators to verify that the system was up and responsive. It is recommended to delete these jobs before using or analyzing this log, as they do not reflect normal usage.
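The share quoted above, and the number of jobs that are not such pwd executions, work out as:

$$
\frac{24{,}025}{42{,}264} \approx 0.568 = 56.8\%, \qquad 42{,}264 - 24{,}025 = 18{,}239
$$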

To aid in this, a cleaned version of the log is provided as NASA-iPSC-1993-3.1-cln.swf. The filter used to remove the spurious pwd jobs was

user=3 and application=1 and processors=1

Note that this filter was applied to the original log, and jobs that do not match it remain untouched. As a result, job numbering in the filtered log is not consecutive.
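The filter can be sketched as a single pass over the SWF lines. This sketch assumes that "user", "application", and "processors" refer to SWF fields 12 (user ID), 14 (executable number), and 5 (allocated processors); the function names are hypothetical. Comment lines (starting with ";") pass through, and matching jobs are dropped without renumbering the rest, which is why gaps appear.

```python
from typing import Iterable, Iterator

# Assumed 0-based positions of the SWF fields used by the filter.
ALLOC_PROCS = 4   # field 5: allocated processors
USER_ID = 11      # field 12: user ID
APP_ID = 13       # field 14: executable (application) number


def is_spurious_pwd(fields: list[str]) -> bool:
    """user=3 and application=1 and processors=1"""
    return (int(fields[USER_ID]) == 3
            and int(fields[APP_ID]) == 1
            and int(fields[ALLOC_PROCS]) == 1)


def clean_swf(lines: Iterable[str]) -> Iterator[str]:
    for line in lines:
        text = line.strip()
        if not text or text.startswith(";"):
            yield line  # header comments and blank lines are kept
        elif not is_spurious_pwd(text.split()):
            yield line  # job numbers are not changed, leaving gaps
```

Applied to the converted log, for example `list(clean_swf(open("NASA-iPSC-1993-3.swf")))`, this keeps every line except those matching the filter.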

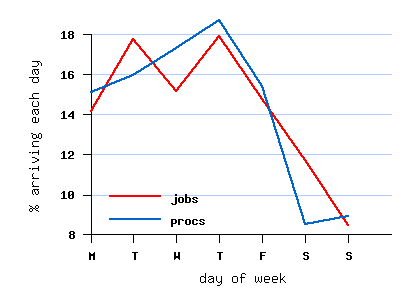

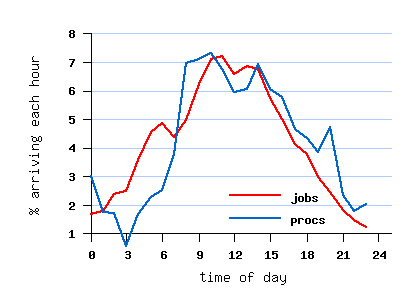

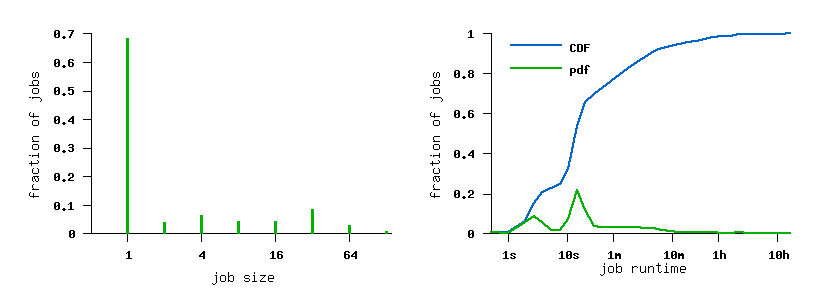

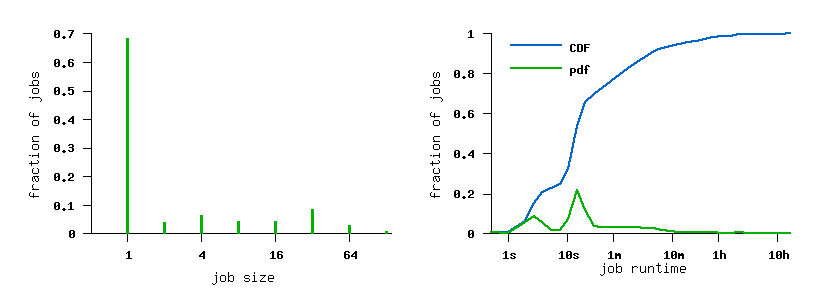

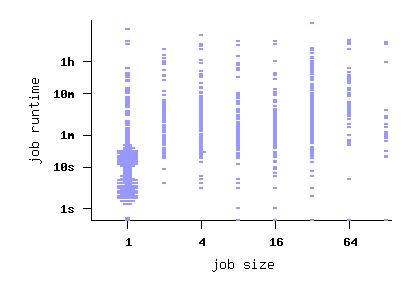

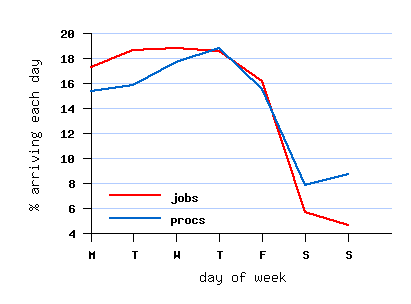

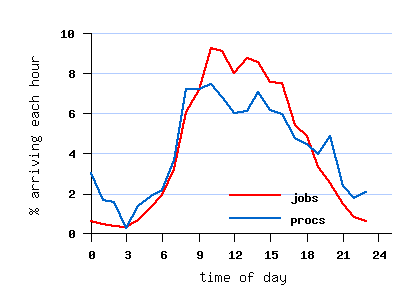

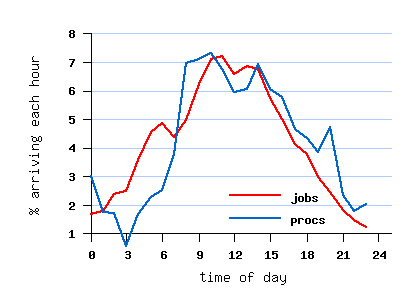

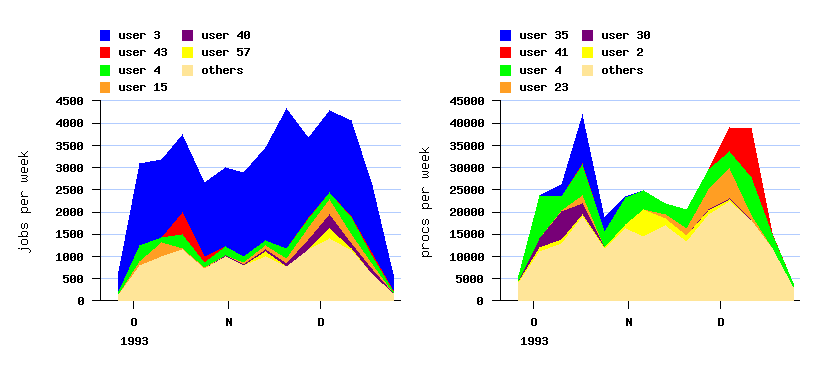

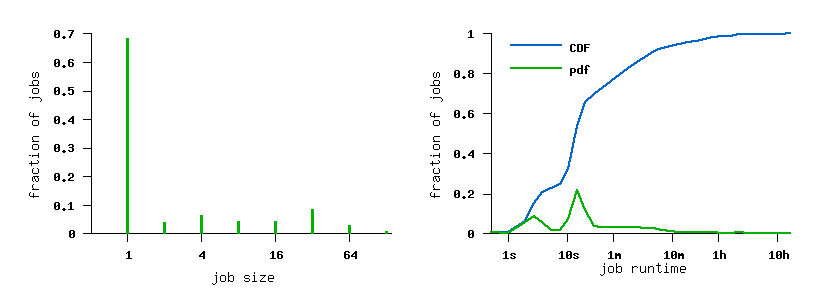

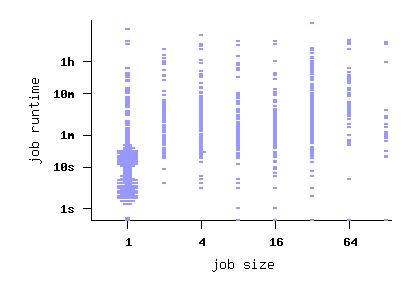

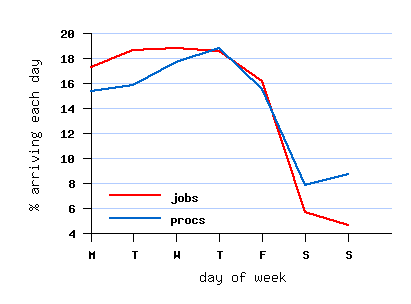

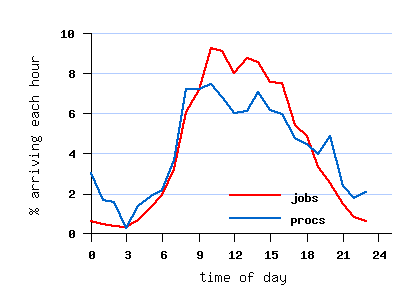

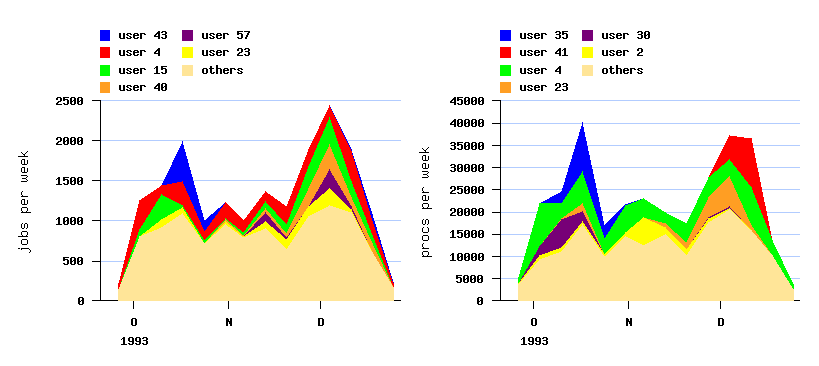

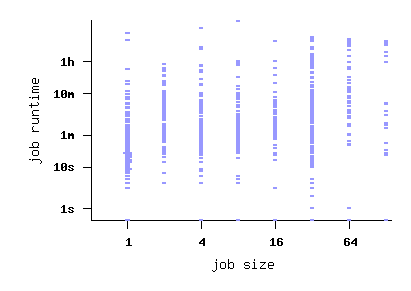

The Log in Graphics

File NASA-iPSC-1993-3.swf

File NASA-iPSC-1993-3.1-cln.swf
